Data preprocessing is an essential step in preparing data for analysis or machine learning models. It involves transforming raw data into a format suitable for further analysis or modeling. The purpose of data preprocessing tools is to clean and transform the data so that it is easy to understand and analyze.

Why is data preprocessing necessary?

Data preprocessing is necessary for several reasons:

- Improving data quality: Raw data often contain errors, missing values, and inconsistencies that can impact the accuracy and reliability of any analysis or modeling. Data preprocessing can help to clean and correct these issues, leading to higher-quality data.

- Enhancing data analysis: Data preprocessing can help transform data into a format that analysis methods can work with. For example, transforming categorical data into numerical data can enable the use of statistical methods that require numerical input.

- Improving model performance: Machine learning models are sensitive to the quality and format of the input data. Data preprocessing prepares the data in ways that improve the model’s performance, such as by reducing noise or standardizing the data.

- Reducing computational requirements: Preprocessing data can help reduce the data’s size and complexity, leading to faster and more efficient analysis or modeling.

Top Data Preprocessing Tools

Pandas

Pandas is an open-source data manipulation and analysis library. It provides data structures for efficiently storing and manipulating large datasets and tools for data cleaning, transformation, and analysis. It is freely available under the BSD 3-clause license. It has a large community and library ecosystem and is widely used in academic and industry settings.

Features

- Data structures: It provides two main data structures, Series (for one-dimensional labeled data) and DataFrame (for two-dimensional tabular data), which are used to manipulate and analyze large datasets.

- Data cleaning: Pandas provides several tools for cleaning data, including handling missing values, filtering data, and removing duplicates.

- Data transformation: Pandas provides several tools for transforming data, including merging and joining datasets, reshaping data, and applying functions to data.

- Data analysis: Pandas provides several tools for analyzing data, including statistical methods, data visualization, and time series analysis.
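The cleaning, transformation, and analysis features listed above can be sketched in a few lines. This is a minimal illustration rather than a recommended workflow: the `orders` and `customers` tables and their column names are made up for the example, and filling missing amounts with the median is just one possible strategy.

```python
import numpy as np
import pandas as pd

# Hypothetical raw data: one duplicated row and some missing amounts.
orders = pd.DataFrame(
    {
        "order_id": [1, 2, 2, 3, 4],
        "customer_id": [10, 11, 11, 12, 10],
        "amount": [25.0, np.nan, np.nan, 40.0, 15.0],
    }
)
customers = pd.DataFrame(
    {"customer_id": [10, 11, 12], "region": ["north", "south", "north"]}
)

# Data cleaning: drop exact duplicate rows, then fill missing amounts
# with the median of the observed values.
clean = orders.drop_duplicates().copy()
clean["amount"] = clean["amount"].fillna(clean["amount"].median())

# Data transformation: join the two tables on their shared key.
merged = clean.merge(customers, on="customer_id", how="left")

# Data analysis: total amount per region.
totals = merged.groupby("region")["amount"].sum()
print(totals)
```

Whether to fill, drop, or flag missing values depends on the dataset; the median is used here only because it is robust to outliers.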

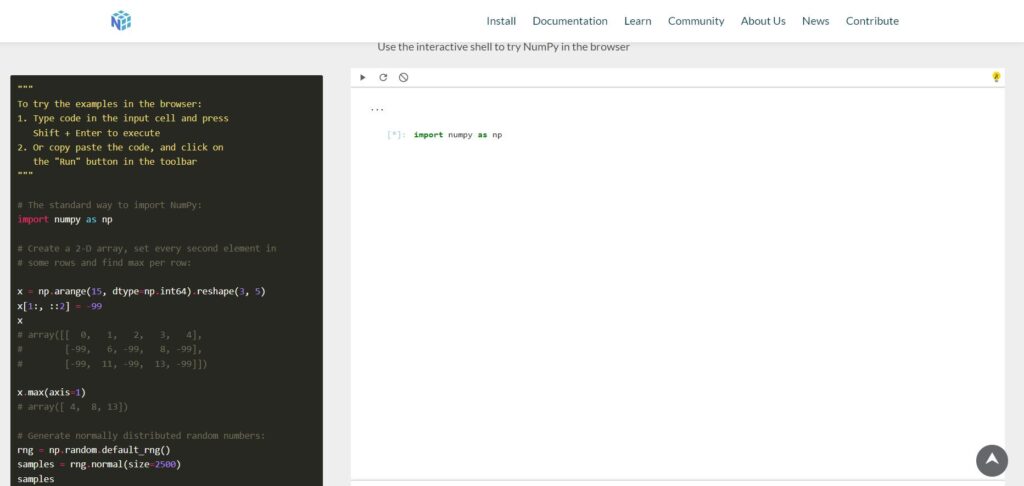

NumPy

NumPy is an open-source numerical computing library for Python. It contains useful tools for array manipulation, such as reshaping, flattening, and stacking (hstack, vstack, dstack), as well as tools for integrating C/C++ and Fortran code. It enables fast linear algebra, Fourier transforms, and random number generation.

Features

- Efficient arrays: NumPy provides efficient data structures for storing and manipulating numerical data arrays, including multidimensional and structured arrays.

- Mathematical functions: NumPy provides a wide range of mathematical functions for working with arrays of numerical data, including linear algebra, Fourier analysis, and random number generation.

- Broadcasting: NumPy provides a powerful feature called broadcasting, which allows for efficient computation of operations between arrays of different shapes and sizes.

- Integration with other libraries: NumPy is often used with other Python libraries for scientific computing, such as Pandas and Matplotlib.
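As a rough sketch of how reshaping, stacking, and broadcasting fit together in a preprocessing step, the example below builds a small feature matrix and standardizes each column. The numbers are invented for illustration.

```python
import numpy as np

# Hypothetical measurements: 6 values reshaped into 3 samples x 2 features.
flat = np.array([1.0, 10.0, 2.0, 20.0, 3.0, 30.0])
X = flat.reshape(3, 2)

# Stacking: append a third feature column, then add one more sample row.
extra_col = np.array([[100.0], [200.0], [300.0]])
X = np.hstack([X, extra_col])            # shape (3, 3)
new_row = np.array([[4.0, 40.0, 400.0]])
X = np.vstack([X, new_row])              # shape (4, 3)

# Broadcasting: the length-3 vectors of column means and standard
# deviations are stretched across all 4 rows, so no loop is needed.
X_std = (X - X.mean(axis=0)) / X.std(axis=0)

print(X_std.mean(axis=0))  # approximately 0 for every column
print(X_std.std(axis=0))   # approximately 1 for every column
```

Broadcasting works here because the trailing dimension of `X` (3 columns) matches the length of the mean and standard deviation vectors.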

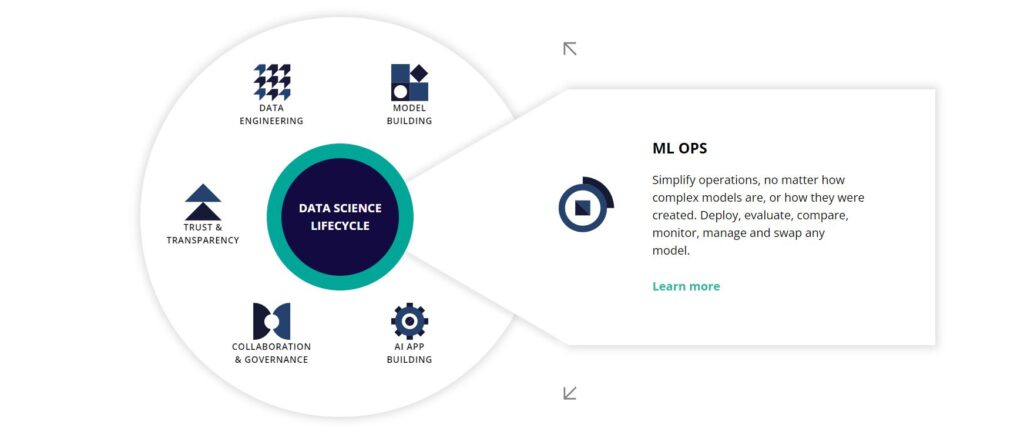

RapidMiner

RapidMiner is a popular end-to-end data science platform that supports the entire machine learning workflow. It is available in both free and commercial editions. The free edition includes the core functionality but caps the amount of data it can process (10,000 rows per dataset in RapidMiner Studio Free). The commercial edition has additional enterprise-grade features.

Features

- Visualization: RapidMiner provides tools for visualizing data and model results, including charts, graphs, and heat maps.

- Data preparation: RapidMiner provides tools for data cleaning, transformation, and feature engineering, including handling missing values, removing outliers, and normalizing data.

- Modeling: RapidMiner provides a wide range of modeling techniques, including regression, classification, clustering, and time series analysis.

- Integration: RapidMiner can be integrated with other data science tools, including R and Python, as well as several popular databases and data sources.

RStudio

RStudio is an open-source integrated development environment (IDE) for the R programming language. It is highly extensible through plugins, add-ins, and packages developed by the wider community. It facilitates collaboration through project sharing, version control integration (Git and Subversion), interactive data views, commenting, etc. Projects can be shared on GitHub or deployed as Shiny apps.

Features

- Data analysis: Through R and its packages, RStudio gives access to statistical methods, machine learning algorithms, and time series analysis. Its built-in debugging and profiling tools help in debugging complex code and improving performance.

- Visualization: RStudio provides tools for visualizing data, including charts, graphs, and interactive dashboards.

- Reporting: RStudio provides tools for generating reports and presentations, including integration with tools like LaTeX and Markdown.

- Integration: RStudio can be integrated with several other tools for data science, including Git, Jupyter Notebooks, and Shiny.

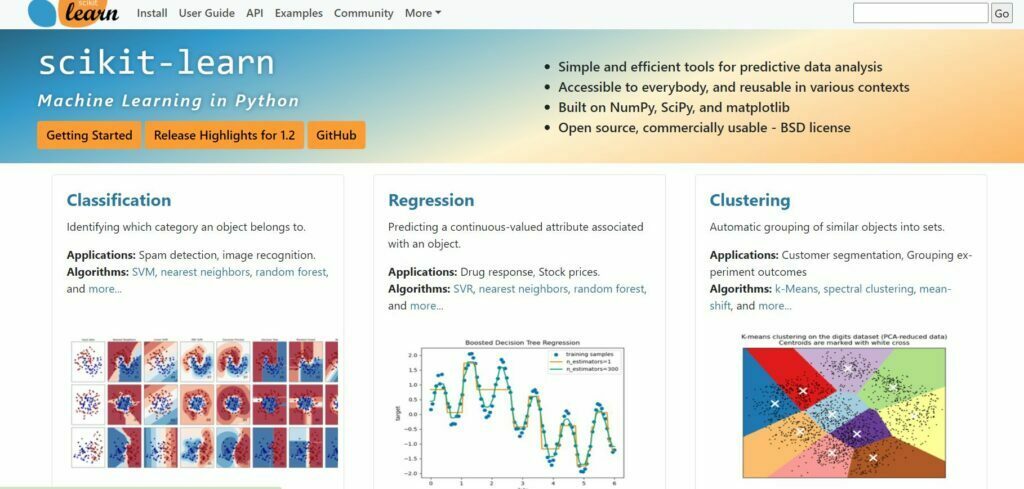

Scikit-Learn

Scikit-Learn is a free, open-source machine-learning library for Python. It provides tools for data preprocessing, feature selection, and model selection and evaluation. It ships with a collection of small, ready-to-use example datasets and utility functions to load them. It builds on NumPy and SciPy and works well with other Python libraries such as Pandas and Matplotlib.

Features

- Consistent interface: Scikit-Learn provides a consistent interface for working with different machine learning algorithms, making it easy to switch between different models.

- Integration with other tools: Scikit-Learn can easily integrate with other Python tools for data analysis and visualization, including Pandas and Matplotlib.

- Easy to use: Scikit-Learn is designed to be easy to use for both beginners and experts in machine learning, with simple and intuitive APIs.
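To illustrate the consistent interface, the sketch below runs the same preprocessing step in front of two different classifiers and changes only the model object. The choice of the bundled iris dataset, the two classifiers, and the split settings are assumptions made for the example, not a recommendation.

```python
from sklearn.datasets import load_iris
from sklearn.linear_model import LogisticRegression
from sklearn.model_selection import train_test_split
from sklearn.neighbors import KNeighborsClassifier
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler

# Load one of the example datasets bundled with the library.
X, y = load_iris(return_X_y=True)
X_train, X_test, y_train, y_test = train_test_split(
    X, y, test_size=0.25, random_state=0, stratify=y
)

scores = {}
for model in (LogisticRegression(max_iter=1000), KNeighborsClassifier()):
    # Every estimator exposes the same fit/score methods, so swapping
    # the model does not change the surrounding code. The scaler is
    # fitted on the training split only.
    pipeline = make_pipeline(StandardScaler(), model)
    pipeline.fit(X_train, y_train)
    scores[type(model).__name__] = pipeline.score(X_test, y_test)

print(scores)
```

Putting the scaler inside the pipeline keeps test data out of the fitted statistics, which is one common reason to prefer pipelines over scaling the whole dataset up front.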

Talend

Talend is a commercial data integration platform that provides tools for data profiling, cleansing, and standardization. It supports efficient and fast integration of data from multiple sources.

Features

- Data profiling: Talend provides tools for profiling data, including identifying data quality issues, detecting duplicates, and analyzing data patterns.

- Data cleansing: Talend provides tools for cleansing data, including handling missing or incorrect data, standardizing data formats, and validating data against predefined rules.

- Integration with other tools: Talend can be integrated with other data science and analytics tools, including R and Python.

Types of Data Preprocessing Tools

Each category of tools performs specific operations to prepare data for analysis, modeling, and insights. These tools make data more coherent, useful, and impactful by enhancing quality, converting formats, combining sources, and compressing volume. Selecting tools based on needs and using them judiciously leads to better data science projects. The main categories are:

- Data Cleaning Tools: These tools perform operations like fixing errors, removing noise and inconsistencies, handling missing values, etc. They improve data quality and accuracy. By cleaning dirty or corrupted data, modeling and analytics can be done effectively.

- Data Transformation Tools: These tools convert data into formats suitable for a task like classification, regression, or clustering. They perform operations such as one-hot encoding, label encoding, normalization, and log transformation. Examples include Pandas and NumPy. By transforming features, models can learn relationships and make accurate predictions.

- Data Integration Tools: These tools combine data from multiple, heterogeneous sources into a unified format. They facilitate joining, merging, reshaping, and blending datasets while preserving meaning. By integrating siloed data, comprehensive insights can be gained, and key business questions can be addressed.

- Data Reduction Tools: These tools compress data into lower dimensions by removing redundancy and extracting key information. Techniques include feature selection, dimensionality reduction, and summarization. By reducing volume while retaining relevant attributes, data becomes more compact, easier to visualize, and more useful for modeling and gaining new knowledge. Computational efficiency often improves, and model accuracy can improve as well.
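The transformation and reduction operations named above can be sketched with Pandas and NumPy. The dataset and its column names are hypothetical, and dropping zero-variance columns is only the simplest form of feature selection, used here as a stand-in for the broader reduction techniques.

```python
import numpy as np
import pandas as pd

# Hypothetical dataset with one categorical and three numeric columns.
df = pd.DataFrame(
    {
        "color": ["red", "green", "red", "blue"],
        "income": [1_000.0, 10_000.0, 100_000.0, 1_000_000.0],
        "age": [20.0, 30.0, 40.0, 60.0],
        "constant": [1.0, 1.0, 1.0, 1.0],
    }
)

# Transformation: one-hot encode the categorical column.
encoded = pd.get_dummies(df, columns=["color"], dtype=float)

# Transformation: log-transform a heavily skewed feature.
encoded["income"] = np.log10(encoded["income"])

# Transformation: min-max normalize age to the range [0, 1].
age = encoded["age"]
encoded["age"] = (age - age.min()) / (age.max() - age.min())

# Reduction: drop columns that carry no information (zero variance).
variances = encoded.var()
reduced = encoded.loc[:, variances > 0]

print(reduced)
```

After these steps every column is numeric and on a comparable scale, and the uninformative `constant` column is gone.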

Some key features to consider in data preprocessing tools:

- Ease of Use: The tools should be user-friendly with simple interfaces and workflows. No complex coding or statistics knowledge should be required to utilize the features. Easy-to-use tools are more accessible and productive.

- Flexibility: The tools must support different preprocessing techniques and allow multiple techniques to be chained together. They should handle both structured and unstructured data. Flexible tools cater to diverse data needs and preferences.

- Scalability: The tools must scale to large datasets and high-volume processing. They should optimize memory usage and processing time. Scalable tools can handle gigabytes and terabytes of data efficiently.

- Compatibility: The tools should be compatible with other tools and workflows used in a data science project. They must integrate well with datasets, ML libraries, databases, etc. Compatible tools provide a seamless experience and reduce friction.

- Cost: The cost of using the tools, including licensing fees, subscriptions, and maintenance costs, must fit within the budget. Free and open-source tools may lack some features or vendor support, while paid tools should provide good value for the money. Cost-effective tools are accessible and sustainable for most organizations.
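As one illustration of the flexibility criterion, the sketch below chains several preprocessing techniques and applies different ones to different columns, using Scikit-Learn's `Pipeline` and `ColumnTransformer`. The tiny table and the chosen steps (median imputation, standardization, one-hot encoding) are assumptions made for the example.

```python
import numpy as np
import pandas as pd
from sklearn.compose import ColumnTransformer
from sklearn.impute import SimpleImputer
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import OneHotEncoder, StandardScaler

# Hypothetical data: a numeric column with a gap and a categorical column.
df = pd.DataFrame(
    {
        "age": [25.0, np.nan, 40.0, 31.0],
        "city": ["Paris", "Lima", "Paris", "Lima"],
    }
)

# Chain two techniques for numeric data: impute, then standardize.
numeric_steps = Pipeline(
    [
        ("impute", SimpleImputer(strategy="median")),
        ("scale", StandardScaler()),
    ]
)

# Route each column to the steps that suit its type.
# sparse_threshold=0 asks for a dense NumPy array as output.
preprocess = ColumnTransformer(
    [
        ("num", numeric_steps, ["age"]),
        ("cat", OneHotEncoder(handle_unknown="ignore"), ["city"]),
    ],
    sparse_threshold=0,
)

features = preprocess.fit_transform(df)
print(features.shape)  # one scaled column plus one column per city
```

Because the whole chain is a single object, the same fitted steps can later be applied to new data with `preprocess.transform`.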

In summary, preprocessing tools should enhance and simplify your work, not complicate it. Choose high-quality, easy-to-use solutions that match your skills and goals. And keep your overall objectives in mind as you select, learn, and utilize these tools. You can optimize your data and machine learning models with the right tools and approaches.

FAQs

What is data preprocessing?

Data preprocessing is the process of cleaning and transforming raw data into a format that is suitable for analysis. It involves a series of steps, such as cleaning, transformation, integration, and reduction, to ensure the data is accurate and reliable.

Why is data preprocessing important?

Data preprocessing is important because it helps improve the accuracy and quality of data analysis. By removing irrelevant or duplicate data, transforming data into a consistent format, and resolving errors, data preprocessing helps to get reliable results.

What are the different types of data preprocessing tools?

There are four main types of data preprocessing tools: data cleaning, data transformation, data integration, and data reduction tools.

What features should I look for in data preprocessing tools?

When choosing a data preprocessing tool, look for ease of use, flexibility, scalability, compatibility, and cost. The tool should be easy to use, provide a wide range of preprocessing capabilities, and scale to the size of your data. Compatibility with existing tools and systems is also important, as is cost-effectiveness.